Mapping robot

Robot that drives around and creates a map of the environment.

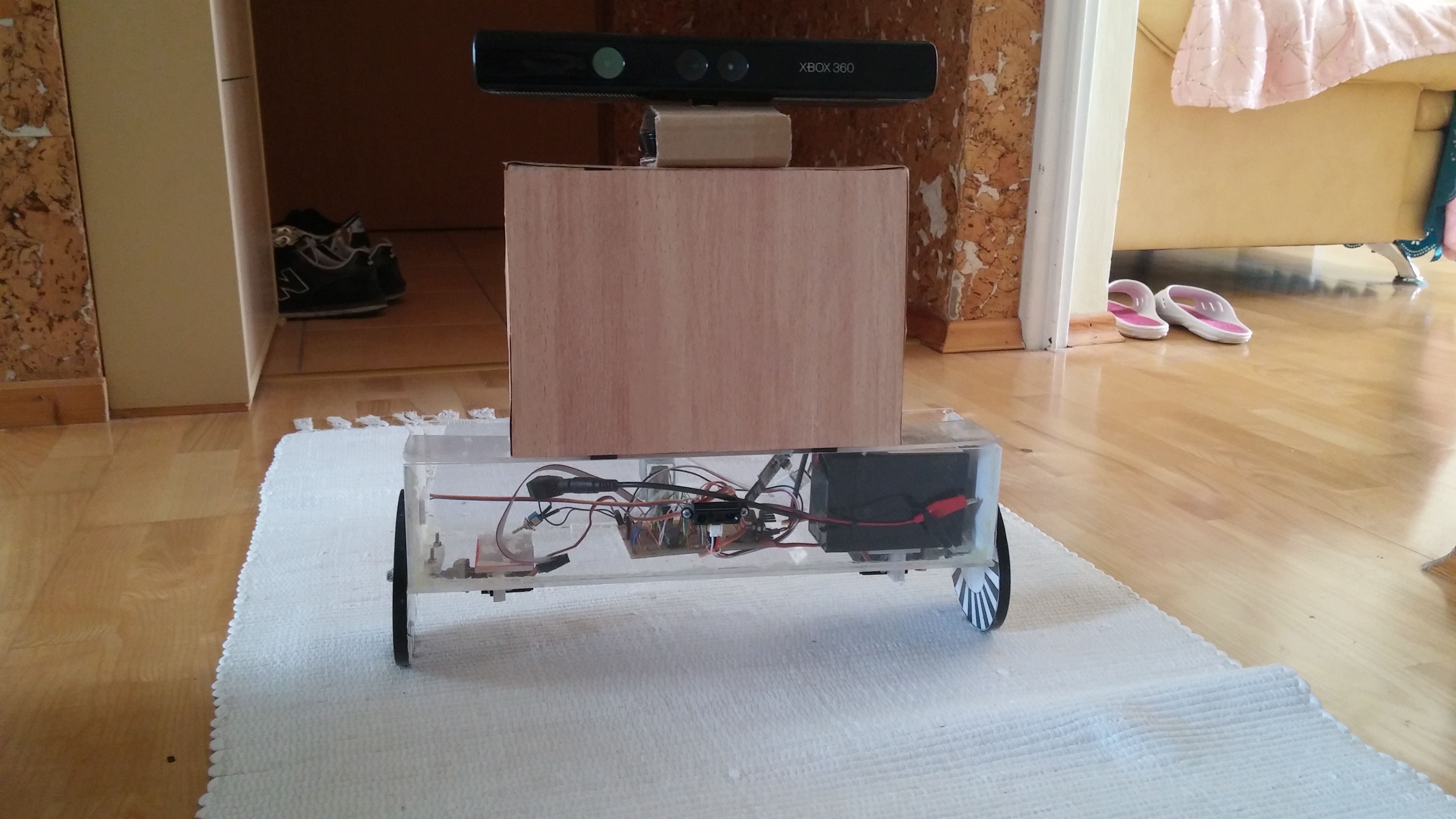

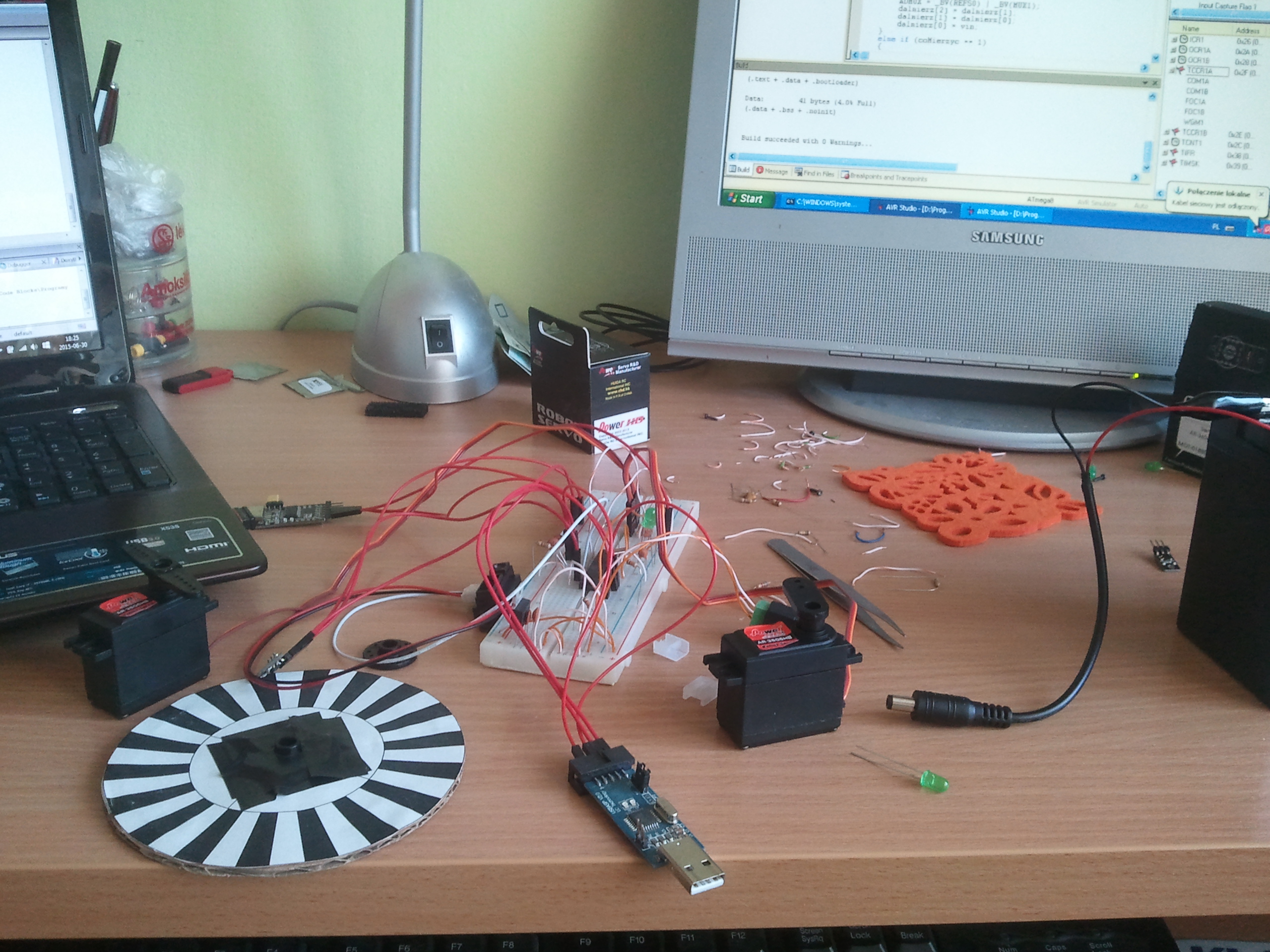

This robot is a simplified version of a general-purpose robot I had built earlier. For this build I narrowed the requirements and focused only on mapping and navigation. Instead of stereo vision I used a Kinect sensor, because the results I had previously achieved with cameras alone weren’t satisfactory. I also added custom-made wheel encoders based on optocouplers and black-and-white patterns on the wheels.

On this robot I implemented a PID controller for driving to a target point.
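The idea of driving to a point under PID control can be sketched roughly as follows. This is a minimal illustration and not the code that ran on the robot: the unicycle motion model, the choice to run the PID on the heading error, the gains, and every name (`PID`, `wrap_angle`, `drive_to_point`, `simulate`) are assumptions made for the example.

```python
import math


class PID:
    """Textbook PID controller; the gains used below are illustrative only."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        self.integral += error * dt
        # No derivative term on the first call, since there is no previous error yet.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def wrap_angle(angle):
    """Wrap an angle to the range [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


def drive_to_point(pose, goal, heading_pid, dt, max_speed=0.2, tolerance=0.02):
    """Return (linear, angular) velocity commands for one control step."""
    x, y, theta = pose
    dx, dy = goal[0] - x, goal[1] - y
    if math.hypot(dx, dy) < tolerance:
        return 0.0, 0.0  # close enough: stop
    heading_error = wrap_angle(math.atan2(dy, dx) - theta)
    angular = heading_pid.update(heading_error, dt)
    # Slow down when pointing away from the goal so the robot turns first.
    linear = max_speed * max(0.0, math.cos(heading_error))
    return linear, angular


def simulate(goal, steps=2000, dt=0.02):
    """Integrate a simple unicycle model to check that the controller converges."""
    pose = (0.0, 0.0, 0.0)
    pid = PID(kp=2.0, ki=0.0, kd=0.1)
    for _ in range(steps):
        v, w = drive_to_point(pose, goal, pid, dt)
        x, y, theta = pose
        pose = (x + v * math.cos(theta) * dt,
                y + v * math.sin(theta) * dt,
                wrap_angle(theta + w * dt))
    return pose
```

Calling `simulate((1.0, 0.5))` returns the final simulated pose, which should end up within the stop tolerance of the goal. On a real differential-drive robot the two velocity commands would still have to be converted into left and right wheel speeds.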

To create a map of the environment, the floor points first had to be removed from the Kinect data. I tested two approaches: fitting a plane with the RANSAC algorithm, and the UV-disparity method, which has a lower computational load because a line is fitted instead of a plane.
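The first of the two approaches, RANSAC plane fitting, can be illustrated with a short pure-Python sketch. It assumes the points are `(x, y, z)` tuples in metres and that the floor is the plane with the most supporting points. The function names, the 2 cm threshold and the iteration count are hypothetical and are not taken from the repositories.

```python
import random


def plane_from_points(p1, p2, p3):
    """Return (a, b, c, d) with a unit normal for the plane through three points, or None."""
    ux, uy, uz = (p2[i] - p1[i] for i in range(3))
    vx, vy, vz = (p3[i] - p1[i] for i in range(3))
    # The normal is the cross product of two edge vectors.
    a, b, c = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    norm = (a * a + b * b + c * c) ** 0.5
    if norm < 1e-9:  # collinear sample: no unique plane
        return None
    a, b, c = a / norm, b / norm, c / norm
    return a, b, c, -(a * p1[0] + b * p1[1] + c * p1[2])


def point_plane_distance(plane, p):
    a, b, c, d = plane
    return abs(a * p[0] + b * p[1] + c * p[2] + d)


def ransac_floor(points, threshold=0.02, iterations=200, seed=0):
    """Return the sampled plane with the most points closer than `threshold`."""
    rng = random.Random(seed)
    best_plane, best_count = None, -1
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        count = sum(1 for p in points if point_plane_distance(plane, p) < threshold)
        if count > best_count:
            best_plane, best_count = plane, count
    return best_plane


def remove_floor(points, threshold=0.02):
    """Drop every point that lies within `threshold` of the fitted floor plane."""
    plane = ransac_floor(points, threshold)
    if plane is None:
        return list(points)
    return [p for p in points if point_plane_distance(plane, p) >= threshold]
```

In a cluttered scene a wall can have more supporting points than the floor, so a practical version would probably also reject candidate planes whose normal is far from the expected floor normal.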

The remaining obstacle points were then projected onto a grid map, with the robot position calculated from the wheel encoders.
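Both steps can be sketched in a few lines of Python. This is an illustration under assumptions, not the project's code: it assumes a differential-drive robot, signed encoder tick counts per wheel (a single optocoupler cannot sense direction, so the sign would have to come from the commanded motor direction), and obstacle points already flattened to 2D in the robot frame. The names `update_pose` and `mark_obstacles` and all parameters are hypothetical.

```python
import math


def update_pose(pose, left_ticks, right_ticks, ticks_per_rev, wheel_radius, wheel_base):
    """Dead-reckoning pose update from the encoder ticks counted since the last update."""
    x, y, theta = pose
    metres_per_tick = 2.0 * math.pi * wheel_radius / ticks_per_rev
    d_left = left_ticks * metres_per_tick
    d_right = right_ticks * metres_per_tick
    d_centre = (d_left + d_right) / 2.0
    d_theta = (d_right - d_left) / wheel_base
    # Midpoint approximation: move along the average heading of this step.
    x += d_centre * math.cos(theta + d_theta / 2.0)
    y += d_centre * math.sin(theta + d_theta / 2.0)
    return x, y, theta + d_theta


def mark_obstacles(grid, pose, points, cell_size):
    """Mark the grid cells hit by obstacle points given in the robot frame (x forward, y left)."""
    x, y, theta = pose
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    rows, cols = len(grid), len(grid[0])
    for px, py in points:
        # Rotate and translate from the robot frame into the map frame.
        wx = x + px * cos_t - py * sin_t
        wy = y + px * sin_t + py * cos_t
        col = math.floor(wx / cell_size)
        row = math.floor(wy / cell_size)
        if 0 <= row < rows and 0 <= col < cols:  # ignore points outside the map
            grid[row][col] = 1


# Usage: a 4 m x 4 m map at 0.1 m per cell, one odometry step, one obstacle 1 m ahead.
grid = [[0] * 40 for _ in range(40)]
pose = update_pose((2.0, 2.0, 0.0), 10, 10,
                   ticks_per_rev=20, wheel_radius=0.03, wheel_base=0.15)
mark_obstacles(grid, pose, [(1.0, 0.0)], cell_size=0.1)
```

Because the pose comes only from integrating encoder ticks, errors accumulate over time and obstacles drift on the map.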

Kinect Mapping GitHub repository

Stereo Vision Mapping GitHub repository

I also tried to run a SLAM algorithm to correct the encoder errors, but I wasn’t able to get any of the available implementations running on Windows (which I was using at the time).

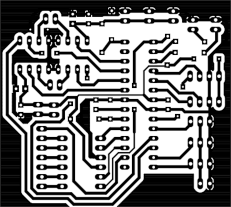

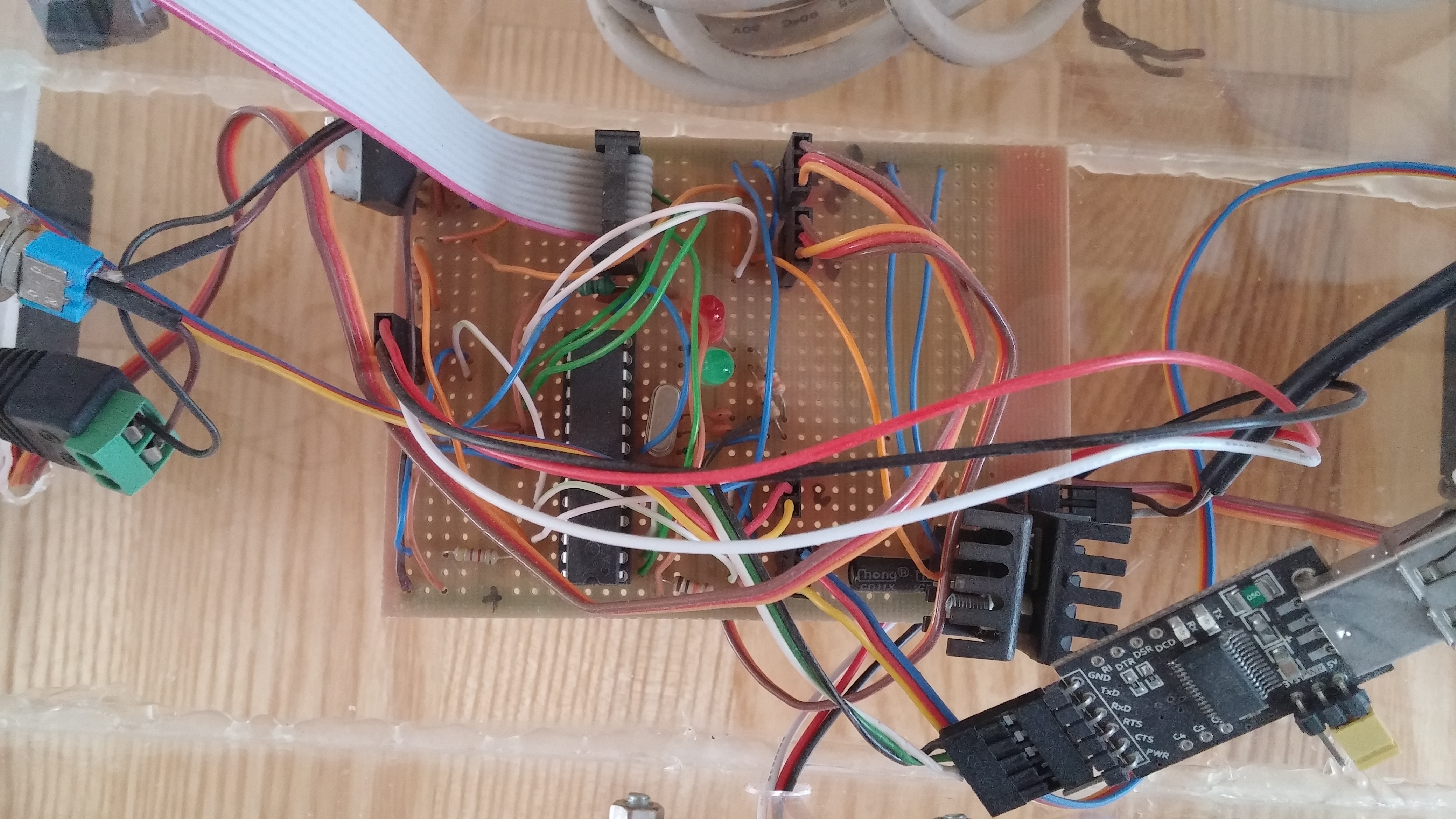

To control the robot I used an ATmega88PA microcontroller connected to the computer through a USB-UART converter. It drove the motors (continuous-rotation servos) and measured the optocoupler voltages to detect wheel rotation. I also used an infrared proximity sensor. The power source was a 6 V gel battery, whose voltage was measured and monitored by the MCU. A 12 V step-up converter powered the Kinect.
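One common way to turn an optocoupler voltage into wheel ticks is to compare it against two thresholds (hysteresis), so that noise near a single threshold is not counted as extra ticks. The Python sketch below only illustrates that logic; it is not the firmware, and the function name and threshold values are assumptions, chosen for a 10-bit ADC range of 0 to 1023.

```python
def count_ticks(samples, low=300, high=700):
    """Count low-to-high transitions in ADC samples using two thresholds."""
    ticks = 0
    # Start in the high state only if the first reading is already above `high`.
    is_high = bool(samples) and samples[0] >= high
    for value in samples:
        if not is_high and value >= high:
            is_high = True
            ticks += 1  # rising edge: one pattern segment passed the sensor
        elif is_high and value <= low:
            is_high = False  # re-arm only after the signal has clearly dropped
    return ticks
```

For example, the dip from 750 to 650 and back to 720 in `[100, 200, 800, 750, 650, 720, 200, 100, 900, 100]` never goes below `low`, so it is not counted as a second rising edge.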